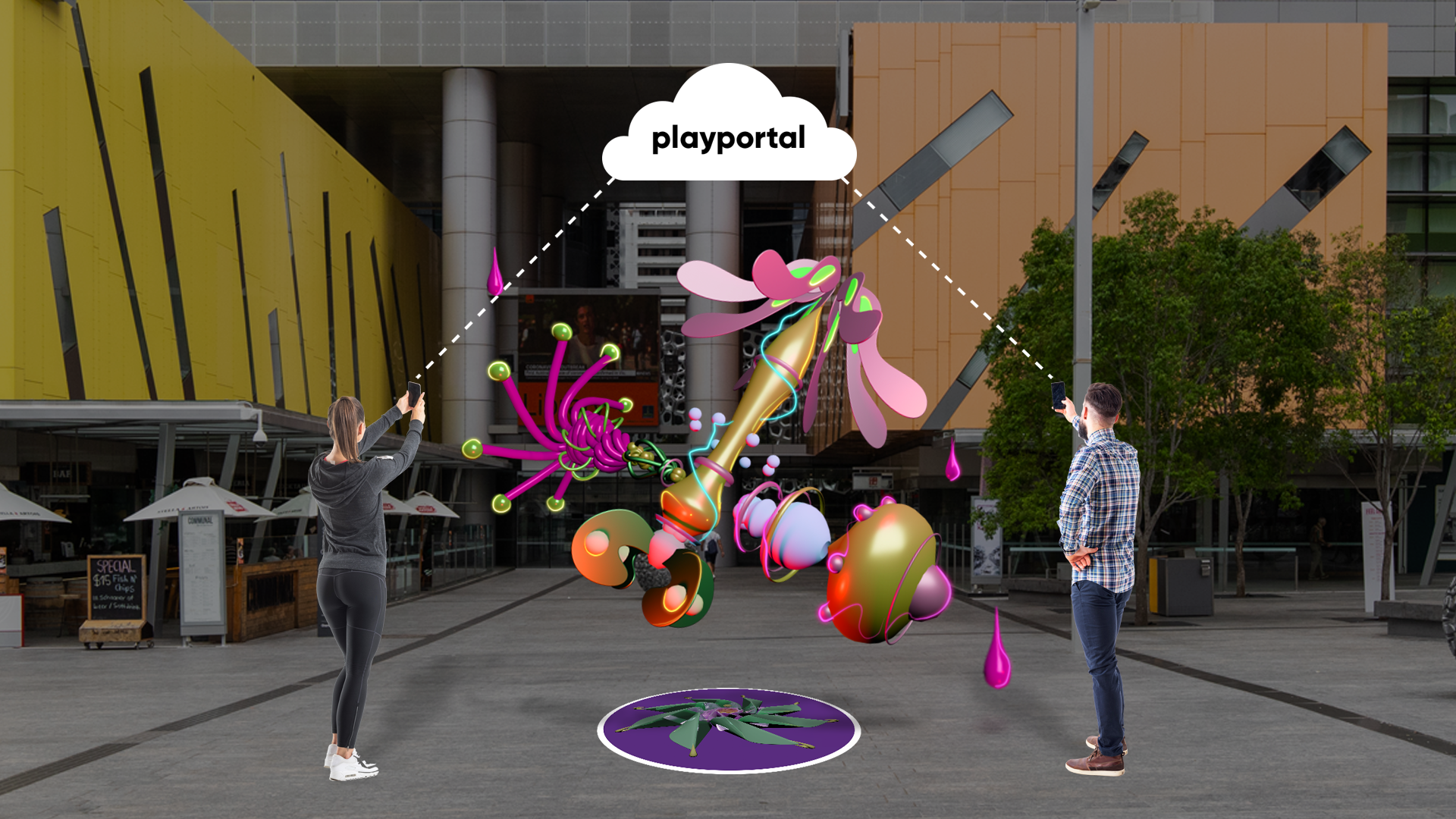

Artificial Ecology was built using Play Portal, our platform that enables thousands of people to interact together with live events from anywhere. Check out a live demo of how it works here!

Code on Canvas was commissioned by EyeJack as their Creative Technology Partner to bring Artificial Ecology to life at the Curiocity Festival in Brisbane. The vision for the activation is to bring people together in public spaces where they can collaborate in a virtual AR environment and build a digital ecosystem together.

If you are interested in finding out more about Artificial Ecology and how to commission it for an event or festival, please Contact EyeJack.

How It Works

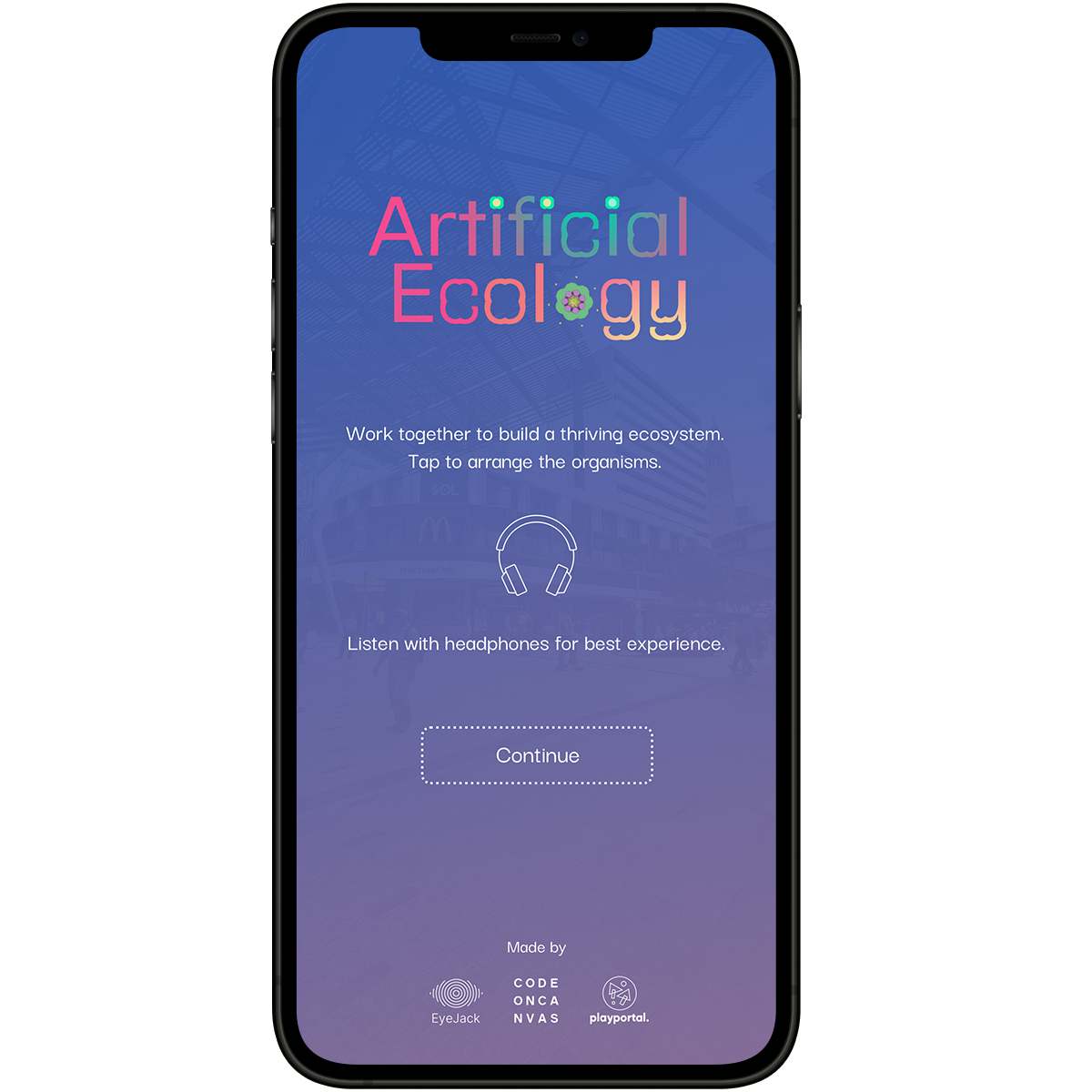

The Artificial Ecology ecosystem consists of a selection of moveable 3D AR organisms. Multiple users can touch their screens to move the organisms toward each other, and the organisms' proximity to one another triggers various events: changing colours, pulsing, deforming and emitting ambient sounds.

The purpose of this interactive artwork is to encourage visitors to work collaboratively to create different configurations of the artwork. Together they discover how the organisms react to one another and what configuration makes their ecosystem thrive.
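The proximity triggers described above can be illustrated with a minimal sketch. This is not the code used in Artificial Ecology; the `Organism` class, the `proximity_events` function and the `trigger_radius` value are all hypothetical names chosen for illustration. It assumes each organism has a position in metres and that a reaction grows stronger as two organisms get closer.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Organism:
    # Hypothetical organism with a name and a 3D position in metres.
    name: str
    x: float
    y: float
    z: float


def distance(a: Organism, b: Organism) -> float:
    """Euclidean distance between two organisms."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def proximity_events(organisms, trigger_radius=1.0):
    """Return (name_a, name_b, intensity) for every pair closer than trigger_radius.

    Intensity is 0 at the edge of the radius and 1 when the two positions
    coincide, so it could drive a colour change, pulse or sound volume.
    """
    events = []
    for a, b in combinations(organisms, 2):
        d = distance(a, b)
        if d < trigger_radius:
            events.append((a.name, b.name, 1.0 - d / trigger_radius))
    return events


organisms = [
    Organism("a", 0.0, 0.0, 0.0),
    Organism("b", 0.5, 0.0, 0.0),
    Organism("c", 5.0, 0.0, 0.0),
]
events = proximity_events(organisms)
```

In this example only organisms `a` and `b` are within range of each other, so a single event is produced for that pair.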

AR Localisation

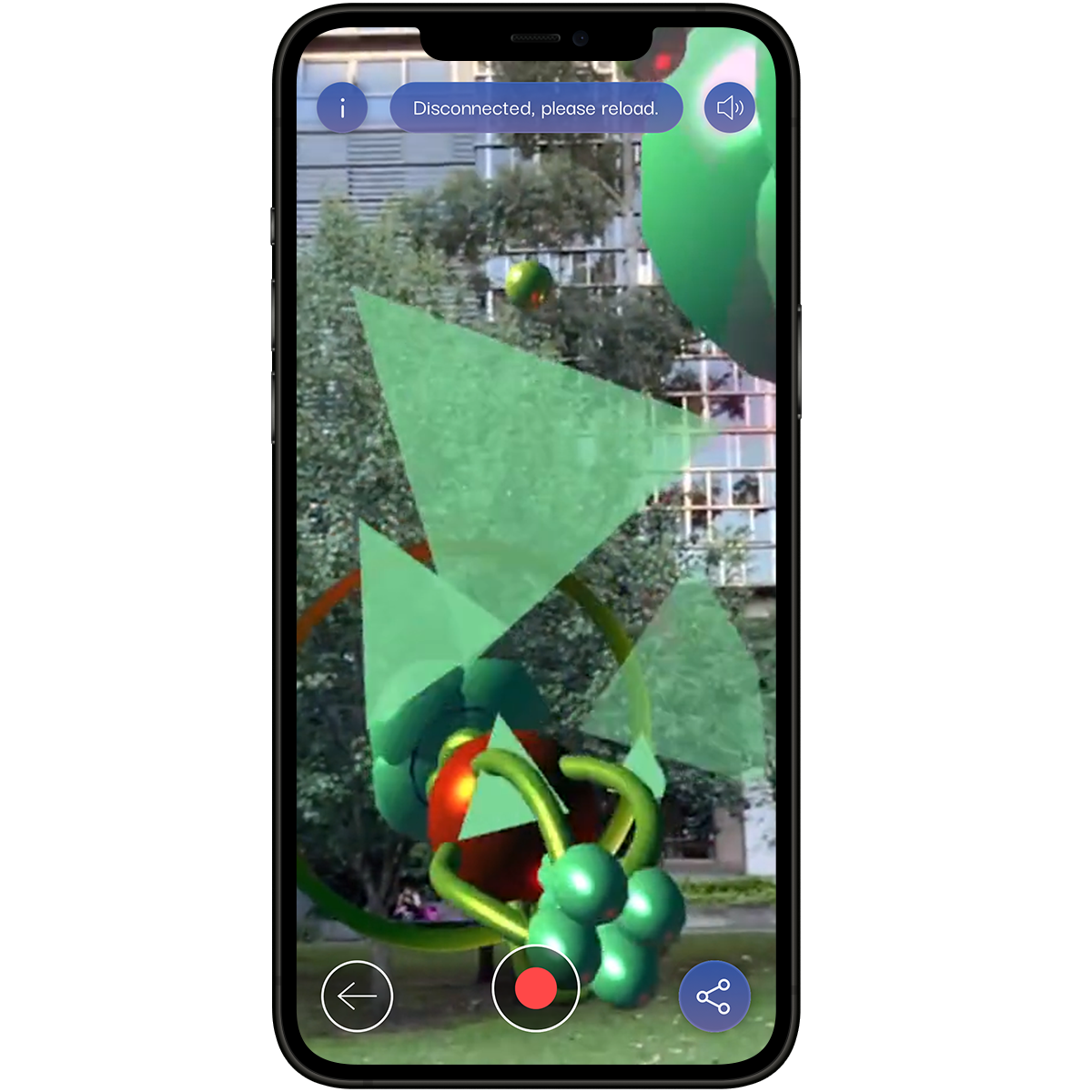

AR looks great when the tracking is good and things appear where they are supposed to be, but sometimes AR content can drift. AR tracking mostly depends on the phone camera and the visible feature points that can be detected and tracked in the camera image. If you point your phone camera at a blank white wall, which has essentially no feature points to track, the phone loses its orientation and the AR content starts to drift.

One good way to improve AR world tracking is to introduce image targets into the environment, so that when the camera sees a printed image target, it can use it to localise the experience. It’s a bit like knowing the exact dimensions of a room and looking at a painting on the wall in front of you. Since you can see the painting and know exactly where it is located on the wall, you should be able to work out your own location in the room.

Artificial Ecology uses 5 printed floor decals to help localise the experience and prevent drift. If a phone camera sees one of the printed floor decals, it can localise and realign the AR experience to where it’s meant to be.
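The realignment idea can be sketched with a simplified 2D example on the floor plane. This is an assumption-laden illustration, not the project's implementation: real AR frameworks report full 3D poses, and the names `Pose2D`, `compose`, `inverse` and `apply` are hypothetical. The sketch assumes we know where a decal sits in the venue and where the phone currently believes it sits in its own drifting session coordinates.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    # Position (metres) and heading (radians) on the floor plane.
    x: float
    y: float
    theta: float


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Return the transform that applies b first, then a."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(a.x + c * b.x - s * b.y,
                  a.y + s * b.x + c * b.y,
                  a.theta + b.theta)


def inverse(p: Pose2D) -> Pose2D:
    """Return the transform that undoes p."""
    c, s = math.cos(p.theta), math.sin(p.theta)
    return Pose2D(-(c * p.x + s * p.y), s * p.x - c * p.y, -p.theta)


def apply(p: Pose2D, point):
    """Transform a 2D point by pose p."""
    c, s = math.cos(p.theta), math.sin(p.theta)
    px, py = point
    return (p.x + c * px - s * py, p.y + s * px + c * py)


# Known, surveyed position of the decal in the venue (assumed values).
decal_in_venue = Pose2D(2.0, 3.0, 0.0)
# Where the phone's drifting AR session currently sees the same decal.
decal_in_session = Pose2D(1.0, 0.0, math.pi / 2)

# Correction that maps session coordinates back onto venue coordinates.
session_to_venue = compose(decal_in_venue, inverse(decal_in_session))

# The observed decal position should land exactly on its known venue position.
corrected = apply(session_to_venue, (decal_in_session.x, decal_in_session.y))
```

Applying `session_to_venue` to all AR content would move it back to where it is meant to be whenever a decal is detected.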

Multiplayer Interaction

User interaction and collaboration are driven behind the scenes by our PlayPortal platform. Users are automatically connected via our cloud service to the same virtual space, which is then rendered from each user's unique perspective, just like any online multiplayer game, only in AR and at the same physical location! Users then interact together within the one shared space. Some organisms are so large and heavy that they move more quickly when several users work together.
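One way to picture why heavy organisms move faster with more people is a small sketch in which a shared simulation adds up every user's push and divides by the organism's mass. This is purely illustrative and is not PlayPortal's API; `step_organism`, the push vectors and the `mass` value are hypothetical.

```python
def step_organism(position, pushes, mass, dt=1.0):
    """Move an organism by the combined pushes of all users for one time step.

    position: (x, y) of the organism on the floor plane.
    pushes:   list of (x, y) push vectors, one per user touching the organism.
    mass:     heavier organisms move less for the same total push.
    """
    net_x = sum(p[0] for p in pushes)
    net_y = sum(p[1] for p in pushes)
    return (position[0] + net_x / mass * dt,
            position[1] + net_y / mass * dt)


heavy_mass = 4.0
start = (0.0, 0.0)

# One user pushing alone versus two users pushing in the same direction.
alone = step_organism(start, [(1.0, 0.0)], heavy_mass)
together = step_organism(start, [(1.0, 0.0), (1.0, 0.0)], heavy_mass)
```

With these assumed numbers, two users pushing in the same direction move the organism twice as far per step as one user alone.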

Artificial Ecology is rich in sound as well as visuals. We recommend earphones or headphones to get the most out of the experience.

Social sharing is made easy with familiar buttons for capturing images and recording videos, with support for every social platform the user has connected on their phone.